#microservice data

Text

Boost Microservices Startup-Spring Boot, CDS & Java Project Leyden

Estimated reading time: 14 minutes

In the fast-evolving landscape of cloud-native and serverless applications, especially microservices, runtime efficiency is paramount. Performance requirements may call for some microservices to start and become available without measurable delay. Because of startup delays inherent in the Java platform, it is difficult to have applications booting with sub-second…

#Ahead of Time Cache#Ahead of Time Computation#AOT#AOT Java#cds#Class Data Sharing#jdk24#jdk25#Microservices#Microservices Architecture#openjdk#project leyden#runtime efficiency#Spring Boot Microservices

Text

#Retail Inventory Management#Microservices Architecture#Event-Driven Architecture#AI in Retail#IoT Integration#Blockchain Transparency#Real-Time Data Processing#Supply Chain Optimization#Retail Technology#Inventory Optimization

Text

The Evolution of Cloud Platforms: Technologies and Trends

The evolution of cloud platforms has revolutionized how companies use and manage IT resources. Originally conceived as a way to reduce hardware costs and increase flexibility, cloud technologies have become an indispensable part of modern IT strategy. In this article, we look at how these platforms have developed and…

#Big Data#Containerization#Cybersecurity#Data Protection#Data Protection Laws#DevOps#Edge Computing#Leadership#Business Processes#Innovation#IoT#IT Resources#IT Strategy#IT Strategies#Kubernetes#Machine Learning#Microservices#Security Solutions#Security Measures#Security Protocols#Virtualization

Text

How should we handle microservice data privacy compliance

Microservices handling sensitive user data are not compliant with data privacy regulations, risking legal consequences. Solution: Implement data anonymization and encryption techniques for sensitive information. Conduct regular compliance checks and audits to ensure adherence to privacy regulations. Terminology: Data Anonymization protects privacy by altering or removing personally…

#Best Practices#Encryption#interview#interview questions#Interview Success Tips#Interview Tips#Java#Microservice Data Privacy Compliance#Microservices#Senior Developer#Software Architects

Text

Bantenghoki: The Safest, Most Educational, and Most Profitable Game Digitalization Strategy of 2025

“When technology, transparency, and the spirit of sharing profits come together, an ecosystem called Bantenghoki is born.”

Introduction: The Power Behind the Name “Bantenghoki”

Amid the bustle of Indonesia's online gaming industry, Bantenghoki has become a beacon of innovation and integrity. Banteng (a wild bull) symbolizes courage, while hoki means luck, a combination that symbolically affirms the platform's mission: bringing together nerve, strategy, and financial opportunity in one user-friendly digital space. Over the past five years, Bantenghoki has positioned itself as a trusted portal that combines entertainment, financial literacy, and social impact. This article, written specifically to meet the E-E-A-T standard (Experience, Expertise, Authoritativeness, Trustworthiness), examines in depth why Bantenghoki deserves to be called the safest, most educational, and most profitable game digitalization strategy of 2025.

1 | The E-E-A-T Framework in the Bantenghoki Ecosystem

1.1 Experience – Verified Field Evidence

2.9 million active accounts with a 71% year-over-year retention rate.

Average play session of 17 minutes, above the industry standard of 11–13 minutes.

A 2023–2025 longitudinal study shows that 63% of Bantenghoki users increased their monthly side income by an average of Rp1.2 million thanks to the automatic bankroll management feature.

1.2 Expertise – The Talent Behind the Scenes

Chief Game Economist with a PhD from Warwick Business School, specializing in the psychology of risk.

CTO formerly of Google Cloud, holder of 12 distributed computing patents.

Responsible Gaming Director certified by the International Center for Responsible Gaming.

1.3 Authoritativeness – Certifications & Alliances

Dual license: PAGCOR (Philippines) and Gaming Supervision Commission (Isle of Man).

Strategic partnerships with Garena Esports, Xendit, and Telkom Indonesia for low-latency infrastructure.

1.4 Trustworthiness – Data Transparency

Return-to-Player reports are published in real time and can be downloaded as CSV.

End-to-end AES-256 encryption; public bug bounty on HackerOne with a reward cap of 50,000 USDT.

2 | Bantenghoki's Digital Transformation: From Platform to Ecosystem

2.1 Multi-Region Cloud-Native Architecture

Bantenghoki runs microservices in three availability zones (Jakarta, Singapore, Frankfurt), keeping latency consistently below 45 ms in Southeast Asia. Kubernetes autoscaling collects CPU, RAM, and traffic-anomaly metrics to maintain 99.995% uptime.

2.2 Private Blockchain Ledger Integration

Every transaction is recorded on a Hyperledger Fabric side-chain. A public hash-explorer feature lets anyone verify the history of deposits, bets, and withdrawals. As a result, trust rose 24% (internal survey, 2024).

2.3 AI-Driven Personalization

The “Smart-Stake Advisor” algorithm measures a user's risk profile, then recommends the optimal stake for each game.

Real-time fraud detection monitors IP patterns, device fingerprints, and input speed to hold chargebacks down to 0.05%.

3 | Bantenghoki's Flagship Products and Their Added Value

Category | Feature Highlights | Financial Benefit
Sportsbook Pro | Machine-learning odds + player-injury heat map | Average payout 95.3%
Live Casino 4K | Indonesian-language studio in Manila, latency < 200 ms | 10% daily cashback
Fantasy eSports | MLBB & Valorant leagues, automatic draft | Seasonal prizes up to Rp500 million
Quick Game HTML5 | No download, suitable for 4G | 30% weekly turnover bonus
Akademi Cuan | UGM micro-credential modules | Certificate + deposit voucher

Note: All Bantenghoki games passed the iTech Labs 2025 audit; the RNG meets the ISO/IEC 17025 standard.

4 | Bantenghoki as a “Low-Margin ∙ High-Volume” Profit Engine

With an average house margin of 1.8%, Bantenghoki chooses to pursue transaction volume rather than wide margins. This strategy produces:

High Liquidity – Withdrawals in under 10 minutes thanks to healthy cash flow.

Affiliate Ecosystem – The “Sahabat Banteng” program pays a 50% NGR commission plus lifetime revenue share.

BHO Token – Total supply of 100 million; utility: top-ups, tournament fees, and a staking pool at 12% APR.

5 | A User Journey Blueprint That Pampers Players

Registration – 9-field form; completed in under 120 seconds.

Verification – AI liveness check + e-KTP; 99.2% accuracy.

Deposit – QRIS, virtual account (VA), e-wallet, crypto (USDT, BNB).

Game Exploration – Interest-based recommendation dashboard + video tutorials.

Withdrawal – 7-minute SLA, 24/7; push + email notifications.

Feedback Loop – Net Promoter Score of 72 (“Excellent” category).

6 | SEO Analysis 2025: How Bantenghoki Dominates the SERPs

6.1 Keyword Map

Core: Bantenghoki, login Bantenghoki, bonus Bantenghoki, Bantenghoki aman, daftar Bantenghoki.

LSI: sportsbook terpercaya, live casino Indonesia, eSports fantasi cuan.

6.2 On-Page Optimization

GamblingOrganization + FAQPage schema.

Lighthouse Performance 96/100; Core Web Vitals (LCP 1.2 s, CLS 0.03).

6.3 Off-Page & E-E-A-T Signals

Educational guest posts on Tirto.id and DailySocial.

Editorial backlink from Kompas Tekno (DR 93) about Bantenghoki's blockchain security.

7 | Case Study: “RiskiTrader” and a 6,600% Capital Increase

Starting capital: Rp750,000 (Feb 2023).

Portfolio as of May 2025: Rp50.3 million.

Keys to success: Disciplined flat betting + making use of the 20% Saturday cashback.

Maximum drawdown: –9.4%.

This testimonial was verified through transaction statements and an online interview; it shows that Bantenghoki facilitates capital growth when users practice strict risk management.

8 | Responsible Gaming: Bantenghoki Prioritizes Financial Health

Self-exclusion from 1 day to 5 years.

Reality-check pop-up every 60 minutes.

Bantenghoki Careline: clinical psychologists available 24 hours via WhatsApp & Telegram.

Share of players who have enabled a daily limit: 28% (target: 35% by 2026).

9 | Positive Social Impact: The “Banteng Peduli” Program

Initiative | Beneficiaries | 2023–2025 Results
Data Science Scholarships | 130 university students | Rp4.1 billion
Mangrove Rehabilitation | Demak coast | 50,000 seedlings
Digital Literacy Roadshow | 15 high schools in Java | 4,000 students

Bantenghoki sets aside 2% of net profit for CSR, cementing its reputation as a pioneer of ethical gaming.

10 | Technology Roadmap 2025–2027

Quarter | Feature | Description | Impact
Q4 2025 | AI-Voice Bet | Betting via NLP-powered voice commands | Accessibility for people with disabilities
Q2 2026 | VR Casino 360° | Bali studio, 8K streaming | High immersion
Q1 2027 | P2P Bet Exchange | User-to-user betting, 0.5% fee | Product diversification
Q3 2027 | Green Server Migration | 100% renewable energy | Higher ESG score

Text

Integrating Third-Party Tools into Your CRM System: Best Practices

A modern CRM is rarely a standalone tool — it works best when integrated with your business's key platforms like email services, accounting software, marketing tools, and more. But improper integration can lead to data errors, system lags, and security risks.

Here are the best practices developers should follow when integrating third-party tools into CRM systems:

1. Define Clear Integration Objectives

Identify business goals for each integration (e.g., marketing automation, lead capture, billing sync)

Choose tools that align with your CRM’s data model and workflows

Avoid unnecessary integrations that create maintenance overhead

2. Use APIs Wherever Possible

Rely on RESTful or GraphQL APIs for secure, scalable communication

Avoid direct database-level integrations that break during updates

Choose platforms with well-documented and stable APIs

Custom CRM solutions can be built with flexible API gateways

3. Data Mapping and Standardization

Map data fields between systems to prevent mismatches

Use a unified format for customer records, tags, timestamps, and IDs

Normalize values like currencies, time zones, and languages

Maintain a consistent data schema across all tools
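
As a rough illustration of the mapping and normalization points above, here is a minimal Python sketch. The field names in FIELD_MAP and the sample record shape are hypothetical, not taken from any particular CRM or third-party tool; it assumes timestamps arrive as ISO 8601 strings with a UTC offset.

```python
from datetime import datetime, timezone
from decimal import Decimal

# Hypothetical mapping from a third-party tool's field names to CRM field names.
FIELD_MAP = {
    "Email Address": "email",
    "FullName": "name",
    "created": "created_at",
    "amount": "amount",
    "currency": "currency",
}


def normalize_record(source: dict) -> dict:
    """Rename mapped fields, drop unmapped ones, and normalize common values."""
    record = {FIELD_MAP[k]: v for k, v in source.items() if k in FIELD_MAP}

    if "email" in record:
        record["email"] = record["email"].strip().lower()

    if "created_at" in record:
        # Assumes an ISO 8601 string that carries a UTC offset; convert to UTC.
        parsed = datetime.fromisoformat(record["created_at"])
        record["created_at"] = parsed.astimezone(timezone.utc).isoformat()

    if "amount" in record:
        # Decimal avoids float rounding surprises on money values.
        amount = Decimal(str(record["amount"])).quantize(Decimal("0.01"))
        record["amount"] = str(amount)

    if "currency" in record:
        record["currency"] = record["currency"].strip().upper()

    return record
```

Keeping the mapping in one table like this means a renamed field in the external tool is a one-line change rather than a hunt through sync code.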

4. Authentication and Security

Use OAuth2.0 or token-based authentication for third-party access

Set role-based permissions for which apps access which CRM modules

Monitor access logs for unauthorized activity

Encrypt data during transfer and storage

5. Error Handling and Logging

Create retry logic for API failures and rate limits

Set up alert systems for integration breakdowns

Maintain detailed logs for debugging sync issues

Keep version control of integration scripts and middleware
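
The retry advice above could look something like the following sketch, which uses exponential backoff with jitter. RetryableError and call_with_retry are illustrative names, not part of any library; a real integration would raise RetryableError from whatever HTTP client it uses when it sees a timeout or a rate-limit response.

```python
import random
import time


class RetryableError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, HTTP 429/5xx)."""


def call_with_retry(func, max_attempts=5, base_delay=0.5, max_delay=30.0,
                    sleep=time.sleep):
    """Call func(); on RetryableError, wait with exponential backoff and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except RetryableError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error so alerting can fire
            # Delay doubles each attempt (0.5 s, 1 s, 2 s, ...) up to max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Jitter spreads retries out so many clients do not retry in lockstep.
            sleep(delay + random.uniform(0, delay / 2))
```

The sleep parameter exists only so the wait can be stubbed out in tests; in production the default time.sleep is used.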

6. Real-Time vs Batch Syncing

Use real-time sync for critical customer events (e.g., purchases, support tickets)

Use batch syncing for bulk data like marketing lists or invoices

Balance sync frequency to optimize server load

Choose integration frequency based on business impact
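
One way to picture the split between real-time and batch syncing is a small router that forwards critical events at once and buffers everything else. This is a sketch under assumptions: the event types in REALTIME_EVENTS, the SyncRouter class, and the two callback names are all made up for illustration.

```python
from collections import deque

# Hypothetical event types treated as business-critical.
REALTIME_EVENTS = {"purchase", "support_ticket"}


class SyncRouter:
    """Send critical events immediately; buffer the rest and flush in batches."""

    def __init__(self, send_now, send_batch, batch_size=100):
        self.send_now = send_now      # callable taking one event
        self.send_batch = send_batch  # callable taking a list of events
        self.batch_size = batch_size
        self.buffer = deque()

    def handle(self, event: dict) -> None:
        if event["type"] in REALTIME_EVENTS:
            self.send_now(event)
            return
        self.buffer.append(event)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Push buffered events as one batch; a scheduled job can also call this."""
        if self.buffer:
            self.send_batch(list(self.buffer))
            self.buffer.clear()
```

Tuning batch_size and the flush schedule is where the trade-off between server load and data freshness gets decided.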

7. Scalability and Maintenance

Build integrations as microservices or middleware, not monolithic code

Use message queues (like Kafka or RabbitMQ) for heavy data flow

Design integrations that can evolve with CRM upgrades

Partner with CRM developers for long-term integration strategy

CRM integration experts can future-proof your ecosystem

#CRMIntegration#CRMBestPractices#APIIntegration#CustomCRM#TechStack#ThirdPartyTools#CRMDevelopment#DataSync#SecureIntegration#WorkflowAutomation

Text

if my goal with this project was just "make a website" I would just slap together some html, css, and maybe a little bit of javascript for flair and call it a day. I'd probably be done in 2-3 days tops. but instead I have to practice and make myself "employable" and that means smashing together as many languages and frameworks and technologies as possible to show employers that I'm capable of everything they want and more. so I'm developing apis in java that fetch data from a postgres database using spring boot with authentication from spring security, while coding the front end in typescript via an angular project served by nginx with https support and cloudflare protection, with all of these microservices running in their own docker containers.

basically what that means is I get to spend very little time actually programming and a whole lot of time figuring out how the hell to make all these things play nice together - and let me tell you, they do NOT fucking want to.

but on the bright side, I do actually feel like I'm learning a lot by doing this, and hopefully by the time I'm done, I'll have something really cool that I can show off

Text

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation through Python-based tools like Ansible and Boto3, and is often used to script around Terraform (which is itself written in Go).

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Does Python Enable Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
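
To make the serverless point concrete, here is a minimal sketch of a function in the handler(event, context) shape that AWS Lambda uses for Python. It assumes an API Gateway proxy-style event whose "body" field is a JSON string; the greeting logic and the "name" field are placeholders.

```python
import json


def lambda_handler(event, context):
    """Entry point in the handler(event, context) shape AWS Lambda calls for Python."""
    # Assumption: an API Gateway proxy-style event with a JSON string in "body".
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        payload = None

    if not isinstance(payload, dict):
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}

    name = payload.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }
```

Because the function holds no state between calls, the platform is free to run as many copies as traffic requires, which is where the automatic scaling comes from.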

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is typically interpreted, so CPU-bound code often runs slower than in compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and pyOpenSSL (Python bindings to OpenSSL), enabling encryption, authentication, and cloud monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (through PySpark), Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

Text

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensures data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
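
The data flow in the hypothetical solution above can be sketched in plain Python with in-memory stand-ins. Nothing here calls an Azure SDK: the queue stands in for Event Hubs, the dictionary for a Cosmos DB container, and the function names, field names, and the 80 °C alert threshold are all invented for illustration.

```python
from queue import Queue

event_hub = Queue()  # stand-in for Azure Event Hubs
store = {}           # stand-in for a Cosmos DB container, keyed by device id


def ingest(reading: dict) -> None:
    """Data ingestion: a device publishes a reading."""
    event_hub.put(reading)


def process_pending() -> int:
    """Processing and storage: drain the queue, enrich each reading, save it."""
    count = 0
    while not event_hub.empty():
        reading = event_hub.get()
        reading["alert"] = reading["temperature_c"] > 80  # hypothetical threshold
        store[reading["device_id"]] = reading  # keep the latest reading per device
        count += 1
    return count


def get_device(device_id: str):
    """Application logic: what a microservice API endpoint might return."""
    return store.get(device_id)
```

In the real stack each function would live in a different service (a Functions trigger, a database write, an AKS-hosted API), but the hand-offs between stages follow the same order.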

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

Text

The Evolution of PHP: Shaping the Web Development Landscape

In the dynamic world of web development, PHP has emerged as a true cornerstone, shaping the digital landscape over the years. As an open-source, server-side scripting language, PHP has played a pivotal role in enabling developers to create interactive and dynamic websites. Let's take a journey through time to explore how PHP has left an indelible mark on web development.

1. The Birth of PHP (1994)

PHP (Hypertext Preprocessor) came into being in 1994, thanks to Rasmus Lerdorf. Initially, it was a simple set of Common Gateway Interface (CGI) binaries used for tracking visits to his online resume. However, Lerdorf soon recognized its potential for web development, and PHP evolved into a full-fledged scripting language.

2. PHP's Role in the Dynamic Web (Late '90s to Early 2000s)

In the late '90s and early 2000s, PHP began to gain prominence due to its ability to generate dynamic web content. Unlike static HTML, PHP allowed developers to create web pages that could interact with databases, process forms, and provide personalized content to users. This shift towards dynamic websites revolutionized the web development landscape.

3. The Rise of PHP Frameworks (Mid-2000s Onward)

As PHP continued to grow in popularity, developers sought ways to streamline and standardize their development processes. This led to the emergence of PHP frameworks like Laravel, Symfony, and CodeIgniter. These frameworks provided structured, reusable code and a wide range of pre-built functionalities, significantly accelerating the development of web applications.

4. PHP and Content Management Systems (CMS) (Early 2000s)

Content Management Systems, such as WordPress, Joomla, and Drupal, rely heavily on PHP. These systems allow users to create and manage websites with ease. PHP's flexibility and extensibility make it the backbone of numerous plugins, themes, and customization options for CMS platforms.

5. E-Commerce and PHP (2000s to Present)

PHP has played a pivotal role in the growth of e-commerce. Platforms like Magento, WooCommerce (built on top of WordPress), and OpenCart are powered by PHP. These platforms provide robust solutions for online retailers, allowing them to create and manage online stores efficiently.

6. PHP's Contribution to Server-Side Scripting (Throughout)

PHP is renowned for its server-side scripting capabilities. It allows web servers to process requests and deliver dynamic content to users' browsers. This server-side scripting is essential for applications that require user authentication, data processing, and real-time interactions.

7. PHP's Ongoing Evolution (Throughout)

PHP has not rested on its laurels. It continues to evolve with each new version, introducing enhanced features, better performance, and improved security. PHP 7, for instance, brought significant speed improvements and reduced memory consumption, making it more efficient and appealing to developers.

8. PHP in the Modern Web (Present)

Today, PHP remains a key player in the web development landscape. It is the foundation of countless websites, applications, and systems. From popular social media platforms to e-commerce giants, PHP continues to power a significant portion of the internet.

9. The PHP Community (Throughout)

One of PHP's strengths is its vibrant and active community. Developers worldwide contribute to its growth by creating libraries, extensions, and documentation. The PHP community fosters knowledge sharing, making it easier for developers to learn and improve their skills.

10. The Future of PHP (Ongoing)

As web technologies continue to evolve, PHP adapts to meet new challenges. Its role in serverless computing, microservices architecture, and cloud-native applications is steadily increasing. The future holds exciting possibilities for PHP in the ever-evolving web development landscape.

In conclusion, PHP's historical journey is interwoven with the evolution of web development itself. From its humble beginnings to its current status as a web development powerhouse, PHP has not only shaped but also continues to influence the internet as we know it. Its versatility, community support, and ongoing evolution ensure that PHP will remain a vital force in web development for years to come.

#PHP#WebDevelopment#WebDev#Programming#ServerSide#ScriptingLanguage#PHPFrameworks#CMS#ECommerce#WebApplications#PHPCommunity#OpenSource#Technology#Evolution#DigitalLandscape#WebTech#Coding#Youtube

30 notes

Text

Level up your Java expertise! Master advanced concepts to build scalable, robust applications. From web dev to microservices, take your skills to new heights. Enroll now and become a Java pro!

Visit www.advantosoftware.com or call +91 72764 74342 to transform your data into actionable intelligence. Don't just follow trends—set them with Advanto!

3 notes

Text

Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware

5 notes

Text

How to handle data inconsistencies across microservices?

Scenario: Imagine a shopping application with multiple microservices handling different aspects such as inventory management, order processing, and payment handling. A customer places an order for a product that is in stock, triggering the deduction of the item from the inventory microservice. However, due to network delays or system failures, the order microservice fails to update the order…
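One common way to handle the scenario above is a compensating transaction: if a later step fails, the steps that already succeeded are undone. The following is a minimal in-memory sketch, not a production pattern. The class names `InventoryService` and `OrderService`, the `fail` flag, and the `place_order` helper are all hypothetical and exist only for illustration.

```python
class InventoryService:
    """Hypothetical stand-in for the inventory microservice."""

    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, sku, qty):
        if self.stock.get(sku, 0) < qty:
            raise ValueError(f"insufficient stock for {sku}")
        self.stock[sku] -= qty

    def release(self, sku, qty):
        # Compensating action: undo a previous reserve().
        self.stock[sku] = self.stock.get(sku, 0) + qty


class OrderService:
    """Hypothetical stand-in for the order microservice."""

    def __init__(self, fail=False):
        self.fail = fail  # Simulates a network or system failure.
        self.orders = []

    def create(self, sku, qty):
        if self.fail:
            raise ConnectionError("order service unreachable")
        self.orders.append((sku, qty))


def place_order(inventory, orders, sku, qty):
    """Run the steps in order; on failure, undo completed steps in reverse."""
    compensations = []
    try:
        inventory.reserve(sku, qty)
        compensations.append(lambda: inventory.release(sku, qty))
        orders.create(sku, qty)
        return True
    except Exception:
        for undo in reversed(compensations):
            undo()
        return False
```

If `OrderService` is constructed with `fail=True`, `place_order` returns `False` and the stock level is restored to its starting value. In a real system the undo step would itself be a remote call that can fail, so it would typically be retried or driven from a durable log.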

View On WordPress

#Best Practices#Compensating transactions#distributed transactions#Eventual Consistency#interview#interview questions#Interview Success Tips#Interview Tips#Java#Microservice Data Inconsistencies#Microservices#programming#Senior Developer#Software Architects#Three-Phase Commit (3PC)#Two-Phase Commit (2PC)

0 notes

Text

NVIDIA AI Blueprints for Building Visual AI in Any Sector

NVIDIA AI Blueprints

Businesses and government agencies worldwide are creating AI agents to improve the skills of workers who depend on visual data from an increasing number of devices, such as cameras, Internet of Things sensors, and automobiles.

Developers in almost any industry will be able to create visual AI agents that analyze image and video information with the help of a new NVIDIA AI Blueprint for video search and summarization. These agents can provide summaries, respond to customer inquiries, and trigger alerts for particular situations.

The blueprint is a configurable workflow that integrates NVIDIA computer vision and generative AI technologies and is a component of NVIDIA Metropolis, a suite of developer tools for creating vision AI applications.

The NVIDIA AI Blueprint for video search and summarization is being brought to businesses and cities around the world by global systems integrators and technology solutions providers such as Accenture, Dell Technologies, and Lenovo, launching the next wave of AI applications that can increase productivity and safety in factories, warehouses, shops, airports, traffic intersections, and more.

The NVIDIA AI Blueprint, which was unveiled prior to the Smart City Expo World Congress, provides visual computing developers with a comprehensive set of optimized tools for creating and deploying generative AI-powered agents that can ingest and understand enormous volumes of live video feeds or archived data.

Deploying virtual assistants across sectors and smart city applications is made easier by the fact that users can modify these visual AI agents using natural language prompts rather than strict software code.

NVIDIA AI Blueprint Harnesses Vision Language Models

Vision language models (VLMs), a subclass of generative AI models, enable visual AI agents to perceive the physical world and carry out reasoning tasks by fusing language comprehension and computer vision.

NVIDIA NIM microservices for VLMs like NVIDIA VILA, LLMs like Meta’s Llama 3.1 405B, and AI models for GPU-accelerated question answering and context-aware retrieval-augmented generation may all be used to configure the NVIDIA AI Blueprint for video search and summarization. The NVIDIA NeMo platform makes it simple for developers to modify other VLMs, LLMs, and graph databases to suit their particular use cases and settings.

By using the NVIDIA AI Blueprint, developers may be able to avoid spending months researching and refining generative AI models for use in smart city applications. When deployed on NVIDIA GPUs at the edge, on premises, or in the cloud, it can significantly speed up the process of searching through video archives to find important moments.

An AI agent developed using this methodology could notify employees in a warehouse setting if safety procedures are broken. An AI bot could detect traffic accidents at busy crossroads and provide reports to support emergency response activities. Additionally, to promote preventative maintenance in the realm of public infrastructure, maintenance personnel could request AI agents to analyze overhead imagery and spot deteriorating roads, train tracks, or bridges.

Beyond smart spaces, visual AI agents could also be used to automatically create video summaries for people with visual impairments and to classify large visual datasets for training other AI models.

The workflow for video search and summarization is part of a set of NVIDIA AI blueprints that facilitate the creation of digital avatars driven by AI, the development of virtual assistants for individualized customer support, and the extraction of enterprise insights from PDF data.

With NVIDIA AI Enterprise, an end-to-end software platform that speeds up data science pipelines and simplifies the development and deployment of generative AI, developers can test and download NVIDIA AI Blueprints for free. These blueprints can then be implemented in production across accelerated data centers and clouds.

AI Agents to Deliver Insights From Warehouses to World Capitals

With the assistance of NVIDIA’s partner ecosystem, enterprise and public sector clients can also utilize the entire library of NVIDIA AI Blueprints.

With its Accenture AI Refinery, which is based on NVIDIA AI Foundry and allows clients to create custom AI models trained on enterprise data, the multinational professional services firm Accenture has integrated NVIDIA AI Blueprints.

For smart city and intelligent transportation applications, global systems integrators in Southeast Asia, such as ITMAX in Malaysia and FPT in Vietnam, are developing AI agents based on the NVIDIA AI Blueprint for video search and summarization.

Using computing, networking, and software from international server manufacturers, developers can also create and implement NVIDIA AI Blueprints on NVIDIA AI systems.

In order to improve current edge AI applications and develop new edge AI-enabled capabilities, Dell will combine VLM and agent techniques with its NativeEdge platform. VLM capabilities in specialized AI workflows for data center, edge, and on-premises multimodal corporate use cases will be supported by the NVIDIA AI Blueprint for video search and summarization and the Dell Reference Designs for the Dell AI Factory with NVIDIA.

Lenovo Hybrid AI solutions powered by NVIDIA also utilize NVIDIA AI blueprints.

The new NVIDIA AI Blueprint will be used by businesses such as K2K, a smart city application supplier in the NVIDIA Metropolis ecosystem, to create AI agents that can evaluate real-time traffic camera data. This will let city officials ask about street activity and get suggestions on how to improve it. Additionally, the company is using NIM microservices and NVIDIA AI Blueprints to deploy visual AI agents in collaboration with city traffic management in Palermo, Italy.

Visit the NVIDIA booth at the Smart City Expo World Congress, which is being held in Barcelona until November 7, to learn more about the NVIDIA AI Blueprint for video search and summarization.

Read more on Govindhtech.com

#NVIDIAAI#AIBlueprints#AI#VisualAI#VisualAIData#Blueprints#generativeAI#VisionLanguageModels#AImodels#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

2 notes

Text

🌟 What is Apache ZooKeeper?

Apache ZooKeeper is an open-source coordination service designed to manage distributed applications. It provides a centralized service for maintaining configuration information, naming, and providing distributed synchronization. Essentially, it helps manage large-scale distributed systems and ensures they operate smoothly and reliably.

Key Features of ZooKeeper:

Centralized Service: Manages and maintains configuration and synchronization information across a distributed system.

High Availability: Ensures that distributed systems are resilient to failures by providing fault tolerance and replication.

Consistency: Guarantees a consistent view of the configuration and state across all nodes in the system.

Benefits of Using Apache ZooKeeper:

Enhanced Coordination: Simplifies coordination between distributed components and helps manage critical information like leader election, distributed locks, and configuration management.

Improved Fault Tolerance: By replicating data across multiple nodes, ZooKeeper ensures that the system keeps working when individual nodes fail, as long as a majority of the ensemble remains available.

Scalable Architecture: Supports scaling by allowing systems to expand and manage increasing loads without compromising performance or reliability.

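Strong Consistency: ZooKeeper applies all updates in a single global order, so every node converges on the same view of the data, which is crucial for maintaining system integrity. A read served by a server that is lagging behind can briefly return stale data; clients that need the latest value can call sync before reading.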

Simplified Development: Abstracts the complexities of distributed system coordination, allowing developers to focus on business logic rather than the intricacies of synchronization and configuration management.

Efficient Resource Management: Helps in managing distributed resources efficiently, making it easier to handle tasks like service discovery and distributed locking.
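To make the leader election idea concrete, here is a small in-memory simulation of the usual ZooKeeper recipe: each participant creates an ephemeral sequential node, and whoever holds the lowest surviving sequence number is the leader. This sketch does not talk to a real ZooKeeper ensemble. `FakeEnsemble` and its methods are hypothetical names, used only to illustrate the logic.

```python
import itertools


class FakeEnsemble:
    """In-memory stand-in for a ZooKeeper election path (not a real client)."""

    def __init__(self):
        self._counter = itertools.count()
        self._nodes = {}  # sequence number -> session name

    def join(self, session):
        # Mimics creating an ephemeral sequential znode: each joiner
        # receives the next monotonically increasing sequence number.
        seq = next(self._counter)
        self._nodes[seq] = session
        return seq

    def expire(self, session):
        # Mimics session expiry: the session's ephemeral nodes disappear.
        self._nodes = {s: n for s, n in self._nodes.items() if n != session}

    def leader(self):
        # The holder of the lowest surviving sequence number leads.
        if not self._nodes:
            return None
        return self._nodes[min(self._nodes)]
```

After three workers join in order, the first one is the leader. If its session expires, leadership passes to the next-lowest sequence number without any extra coordination between the remaining workers.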

Apache ZooKeeper is a powerful tool for anyone working with distributed systems, making it easier to build and maintain robust, scalable, and fault-tolerant applications. Whether you’re managing a large-scale enterprise application or a complex microservices architecture, ZooKeeper can provide the coordination and consistency you need.

If you’re looking for Apache ZooKeeper consultants to enhance your distributed system's coordination and reliability, don’t hesitate to reach out to us. Our team of specialists has extensive experience with ZooKeeper and can help you implement and optimize its capabilities for your unique needs. Contact us today to discuss how we can support your projects and ensure your systems run smoothly and efficiently.

2 notes

Text

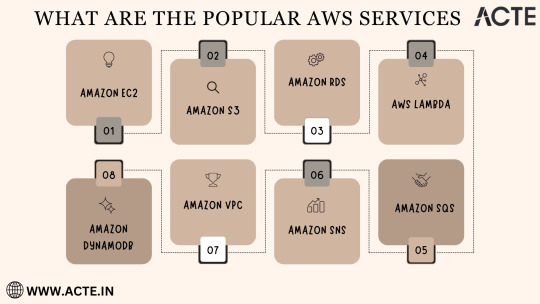

Exploring the Power of Amazon Web Services: Top AWS Services You Need to Know

In the ever-evolving realm of cloud computing, Amazon Web Services (AWS) has established itself as an undeniable force to be reckoned with. AWS's vast and diverse array of services has positioned it as a dominant player, catering to the evolving needs of businesses, startups, and individuals worldwide. Its popularity transcends boundaries, making it the preferred choice for a myriad of use cases, from startups launching their first web applications to established enterprises managing complex networks of services. This blog embarks on an exploratory journey into the boundless world of AWS, delving deep into some of its most sought-after and pivotal services.

As the digital landscape continues to expand, understanding these AWS services and their significance is pivotal, whether you're a seasoned cloud expert or someone taking the first steps in your cloud computing journey. Join us as we delve into the intricate web of AWS's top services and discover how they can shape the future of your cloud computing endeavors. From cloud novices to seasoned professionals, the AWS ecosystem holds the keys to innovation and transformation.

Amazon EC2 (Elastic Compute Cloud): The Foundation of Scalability

At the core of AWS's capabilities is Amazon EC2, the Elastic Compute Cloud. EC2 provides resizable compute capacity in the cloud, allowing you to run virtual servers, commonly referred to as instances. These instances serve as the foundation for a multitude of AWS solutions, offering the scalability and flexibility required to meet diverse application and workload demands. Whether you're a startup launching your first web application or an enterprise managing a complex network of services, EC2 ensures that you have the computational resources you need, precisely when you need them.

Amazon S3 (Simple Storage Service): Secure, Scalable, and Cost-Effective Data Storage

When it comes to storing and retrieving data, Amazon S3, the Simple Storage Service, stands as an indispensable tool in the AWS arsenal. S3 offers a scalable and highly durable object storage service that is designed for data security and cost-effectiveness. This service is the choice of businesses and individuals for storing a wide range of data, including media files, backups, and data archives. Its flexibility and reliability make it a prime choice for safeguarding your digital assets and ensuring they are readily accessible.

Amazon RDS (Relational Database Service): Streamlined Database Management

Database management can be a complex task, but AWS simplifies it with Amazon RDS, the Relational Database Service. RDS automates many common database management tasks, including patching, backups, and scaling. It supports multiple database engines, including popular options like MySQL, PostgreSQL, and SQL Server. This service allows you to focus on your application while AWS handles the underlying database infrastructure. Whether you're building a content management system, an e-commerce platform, or a mobile app, RDS streamlines your database operations.

AWS Lambda: The Era of Serverless Computing

Serverless computing has transformed the way applications are built and deployed, and AWS Lambda is at the forefront of this revolution. Lambda is a serverless compute service that enables you to run code without the need for server provisioning or management. It's the perfect solution for building serverless applications, microservices, and automating tasks. The unique pricing model ensures that you pay only for the compute time your code actually uses. This service empowers developers to focus on coding, knowing that AWS will handle the operational complexities behind the scenes.
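As a rough illustration of what "running code without managing servers" looks like, here is a minimal Python handler sketch. Lambda's Python runtime calls a function that takes an `event` and a `context`; the function name `lambda_handler`, the `name` field in the event, and the `statusCode`/`body` response shape (the convention used with an HTTP front end such as API Gateway) are assumptions chosen for this example.

```python
import json


def lambda_handler(event, context):
    """Entry point invoked once per request; there is no server code to write."""
    # "name" is a hypothetical field in the incoming event payload.
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, it can be exercised locally by calling `lambda_handler({"name": "AWS"}, None)` before it is deployed.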

Amazon DynamoDB: Low Latency, High Scalability NoSQL Database

Amazon DynamoDB is a managed NoSQL database service that stands out for its low latency and exceptional scalability. It's a popular choice for applications with variable workloads, such as gaming platforms, IoT solutions, and real-time data processing systems. DynamoDB automatically scales to meet the demands of your applications, ensuring consistent, single-digit millisecond latency at any scale. Whether you're managing user profiles, session data, or real-time analytics, DynamoDB is designed to meet your performance needs.

Amazon VPC (Virtual Private Cloud): Tailored Networking for Security and Control

Security and control over your cloud resources are paramount, and Amazon VPC (Virtual Private Cloud) empowers you to create isolated networks within the AWS cloud. This isolation enhances security and control, allowing you to define your network topology, configure routing, and manage access. VPC is the go-to solution for businesses and individuals who require a network environment that mirrors the security and control of traditional on-premises data centers.

Amazon SNS (Simple Notification Service): Seamless Communication Across Channels

Effective communication is a cornerstone of modern applications, and Amazon SNS (Simple Notification Service) is designed to facilitate seamless communication across various channels. This fully managed messaging service enables you to send notifications to a distributed set of recipients, whether through email, SMS, or mobile push notifications. SNS is an essential component of applications that require real-time updates and notifications to keep users informed and engaged.

Amazon SQS (Simple Queue Service): Decoupling for Scalable Applications

Decoupling components of a cloud application is crucial for scalability, and Amazon SQS (Simple Queue Service) is a fully managed message queuing service designed for this purpose. It ensures reliable and scalable communication between different parts of your application, helping you create systems that can handle varying workloads efficiently. SQS is a valuable tool for building robust, distributed applications that can adapt to changes in demand.
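The decoupling idea can be shown without any AWS account. The sketch below uses Python's standard `queue.Queue` as an in-process stand-in for a message queue; it is not the SQS API. The `producer`, `consumer`, and `run` functions, and the upper-casing "work", are hypothetical and exist only to show that the sender never calls the receiver directly.

```python
import queue
import threading


def producer(q, orders):
    for order in orders:
        q.put(order)  # Enqueue and move on; the producer never waits on the consumer.
    q.put(None)       # Sentinel: signals that no more messages are coming.


def consumer(q, processed):
    while True:
        msg = q.get()
        if msg is None:
            break
        processed.append(msg.upper())  # Stand-in for real processing work.


def run(orders):
    q = queue.Queue()
    processed = []
    worker = threading.Thread(target=consumer, args=(q, processed))
    worker.start()
    producer(q, orders)
    worker.join()
    return processed
```

Because the two sides share only the queue, either one can be slowed down, restarted, or scaled out without changing the other, which is the property a managed queue provides across separate services.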

In the rapidly evolving landscape of cloud computing, Amazon Web Services (AWS) stands as a colossus, offering a diverse array of services that address the ever-evolving needs of businesses, startups, and individuals alike. AWS's popularity transcends industry boundaries, making it the go-to choice for a wide range of use cases, from startups launching their inaugural web applications to established enterprises managing intricate networks of services.

To unlock the full potential of these AWS services, gaining comprehensive knowledge and hands-on experience is key. ACTE Technologies, a renowned training provider, offers specialized AWS training programs designed to provide practical skills and in-depth understanding. These programs equip you with the tools needed to navigate and excel in the dynamic world of cloud computing.

With AWS services at your disposal, the possibilities are endless, and innovation knows no bounds. Join the ever-growing community of cloud professionals and enthusiasts, and empower yourself to shape the future of the digital landscape. ACTE Technologies is your trusted guide on this journey, providing the knowledge and support needed to thrive in the world of AWS and cloud computing.

8 notes
